Correctness of ML datasets

Identifying mislabeled instances in AI datasets

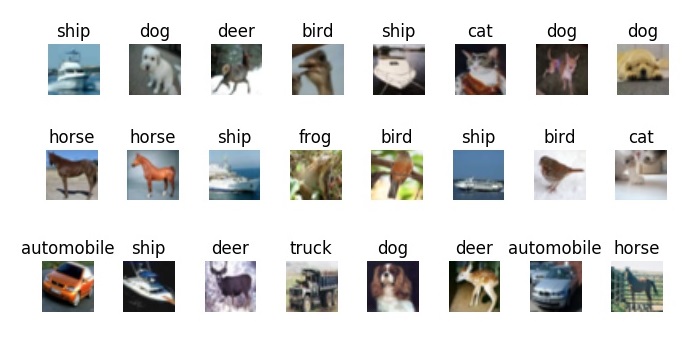

Machine learning requires data from which to learn: Even the most advanced neural network \(f\) is merely a mapping \(x \to y\), learnt from the training dataset. Thus, the correctness of training data is paramount. The figure below shows a popular image recognition dataset, the Cifar-10 dataset, which consists of inputs \(x\) (the images) and labels \(y\) (the associated classes).

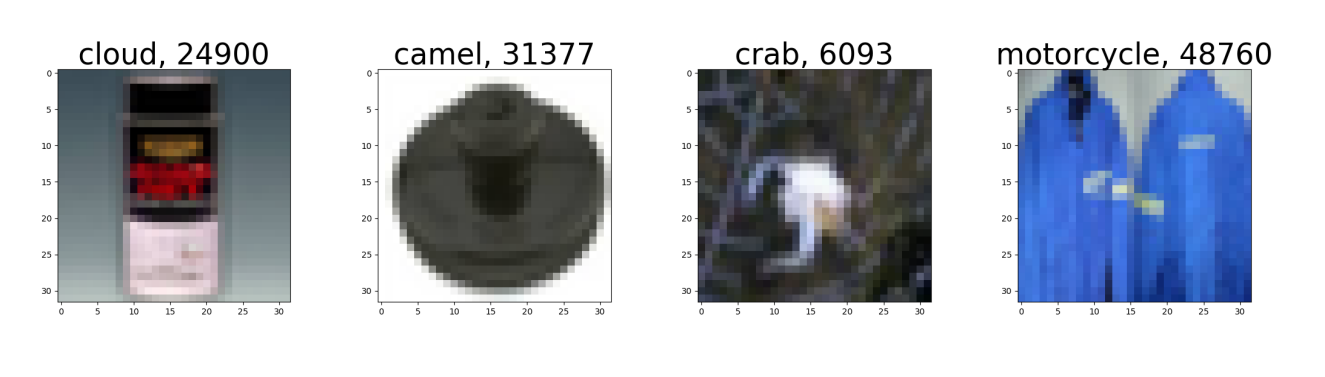

However, it turns out that even in the most popular datasets, there are a number of instances whose labels \(y\) are plain wrong. Consider the following examples from the Cifar-10 dataset, where the semantics of the image do not correspond to the label.

While the Cifar-10 dataset is generally very clean (we found only 7 mislabeled instances in 60,000 images), other datasets contain a much larger number of mislabeled samples. This is a problem not only for training but also for evaluation, since wrong labels in the evaluation data skew accuracy and other metrics.
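The effect on evaluation can be bounded. Suppose k of the n evaluation labels are wrong. The measured accuracy can then differ from the accuracy against the true labels by at most k/n, in either direction. A correct prediction can be scored as an error, or a wrong prediction can happen to match a wrong label. The minimal Python sketch below illustrates this on a toy example. The function names are hypothetical and not part of the authors' code.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the given labels."""
    if len(predictions) != len(labels) or len(labels) == 0:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def max_accuracy_skew(n_samples, n_mislabeled):
    """Upper bound on |measured accuracy - true accuracy| when
    n_mislabeled of the n_samples evaluation labels are wrong.
    Each wrong label can change the score of at most one sample."""
    return n_mislabeled / n_samples


# Toy evaluation set: the last sample's true class is 3, but it is labeled 0.
true_labels = [0, 1, 2, 3]
noisy_labels = [0, 1, 2, 0]
predictions = [0, 1, 2, 3]  # the model is actually right on every sample

print(accuracy(predictions, true_labels))      # 1.0
print(accuracy(predictions, noisy_labels))     # 0.75
print(max_accuracy_skew(len(true_labels), 1))  # 0.25
```

In this toy case, the single wrong label makes a perfect model look 25 percentage points worse, which exactly reaches the k/n bound.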

How can these mislabeled instances be found? Since such datasets are huge, manually inspecting every individual image/label pair is not an option. Thus, we designed an algorithm to identify these mislabeled instances. You can find the technical description here, and the source code here.
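The authors' algorithm is described elsewhere, and the sketch below is not that algorithm. It illustrates one common, generic heuristic, swapped in here only to show how automated detection can work. A sample is flagged when few of its k nearest neighbours in some feature space share its label. The sketch assumes that each image has already been mapped to a feature vector, for example an embedding from a pretrained network. The function `flag_suspect_labels` and its parameters `k` and `min_agreement` are hypothetical.

```python
import numpy as np


def flag_suspect_labels(features, labels, k=5, min_agreement=0.5):
    """Return indices of samples whose label is shared by fewer than
    `min_agreement` of their k nearest neighbours (Euclidean distance).

    Hypothetical helper: `features` is an (n, d) array of per-sample
    feature vectors (assumed, e.g., embeddings), `labels` an (n,) array.
    """
    features = np.asarray(features, dtype=float)
    labels = np.asarray(labels)
    n = len(labels)
    if not 0 < k < n:
        raise ValueError("k must satisfy 0 < k < number of samples")

    # Pairwise squared Euclidean distances, shape (n, n): O(n^2) memory.
    diffs = features[:, None, :] - features[None, :, :]
    dists = (diffs ** 2).sum(axis=-1)
    # A sample must not count as its own neighbour.
    np.fill_diagonal(dists, np.inf)

    suspects = []
    for i in range(n):
        neighbours = np.argsort(dists[i])[:k]
        # Fraction of the k neighbours that carry the same label as sample i.
        agreement = np.mean(labels[neighbours] == labels[i])
        if agreement < min_agreement:
            suspects.append(i)
    return suspects


# Toy data: two well-separated clusters, plus one point inside
# cluster 0 that carries label 1 (a simulated labeling error).
X = [[0, 0], [0, 1], [1, 0], [1, 1],
     [10, 10], [10, 11], [11, 10], [11, 11],
     [0.5, 0.5]]
y = [0, 0, 0, 0, 1, 1, 1, 1, 1]
print(flag_suspect_labels(X, y, k=3))  # [8]
```

Flagged indices are only candidates for manual review. A sample can also be flagged because it is a genuine but unusual example of its class. The full distance matrix needs O(n²) memory, so at the scale of 60,000 images a batched or approximate nearest-neighbour search would be needed instead.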