Deepfake Detection

Try this interactive challenge and see if you can spot the audio deepfake!

We saw earlier that deep learning is able to clone the voice of any given target, such as Angela Merkel. This raises the following questions:

- How easily are humans fooled by these deepfakes?

- Can we use AI to detect audio deepfakes?

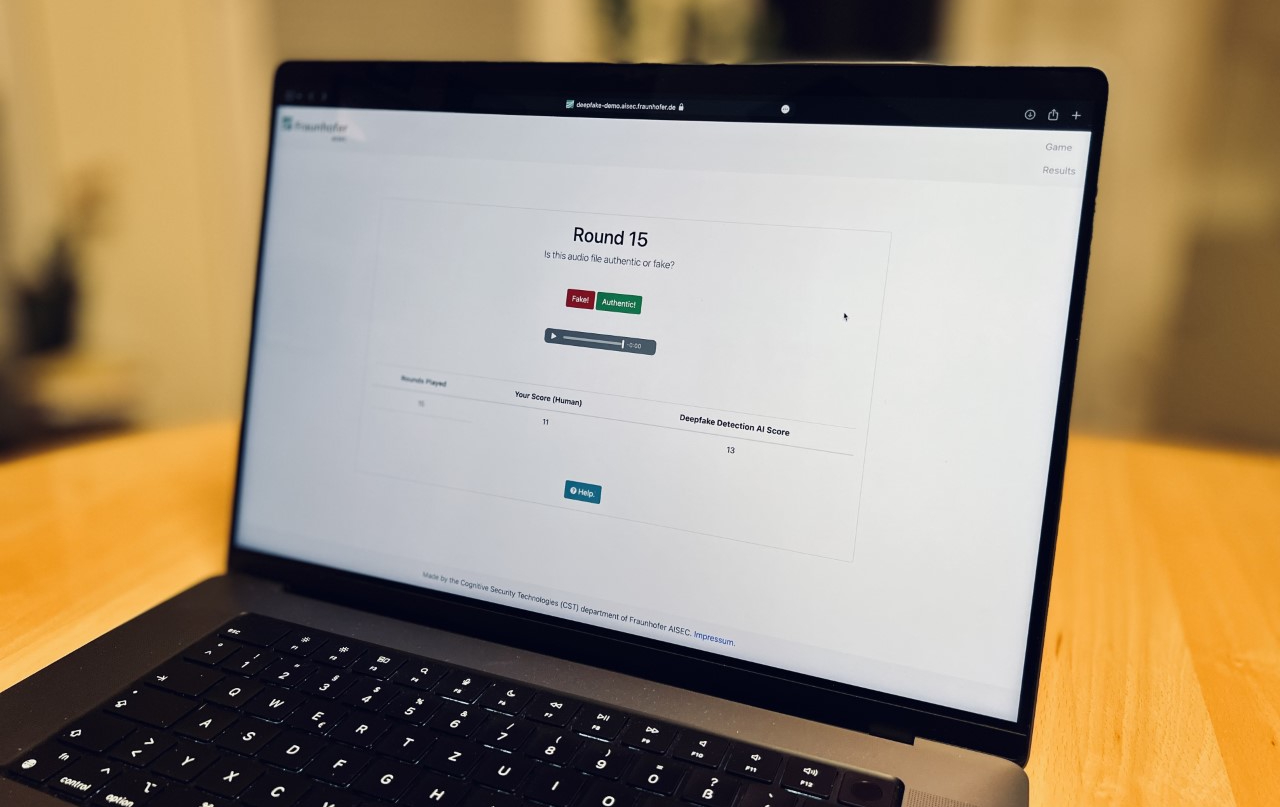

We conducted an online study in which we presented over 8900 audio files to 200 users. Each audio file was either authentic or a deepfake, and the users were asked to classify it as one or the other. You can try it yourself on this website. We then measured the performance of the human players and of an AI specifically trained to detect audio deepfakes.
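To make the comparison concrete, here is a minimal sketch of how per-scenario performance could be computed for humans and for the AI. It assumes plain accuracy as the metric, which may differ from the metric used in the paper. The function name `accuracy_by_scenario`, the scenario names, and all answers are made up for illustration and are not data from the study.

```python
from collections import defaultdict


def accuracy_by_scenario(records):
    """Return the fraction of correct answers per attack scenario.

    records: iterable of (scenario, true_label, predicted_label) tuples.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for scenario, truth, guess in records:
        total[scenario] += 1
        correct[scenario] += int(truth == guess)
    return {s: correct[s] / total[s] for s in total}


# Hypothetical toy answers, NOT results from the study.
human_answers = [
    ("attack_A", "deepfake", "deepfake"),
    ("attack_A", "authentic", "deepfake"),
    ("attack_B", "deepfake", "deepfake"),
    ("attack_B", "authentic", "authentic"),
]
ai_answers = [
    ("attack_A", "deepfake", "deepfake"),
    ("attack_A", "authentic", "authentic"),
    ("attack_B", "deepfake", "authentic"),
    ("attack_B", "authentic", "authentic"),
]

human_acc = accuracy_by_scenario(human_answers)
ai_acc = accuracy_by_scenario(ai_answers)

# Print one row per scenario: name, human accuracy, AI accuracy.
for scenario in sorted(human_acc):
    print(scenario, human_acc[scenario], ai_acc[scenario])
```

Grouping by attack scenario, rather than reporting a single overall score, is what makes it possible to see cases where one side does better in some scenarios and worse in others.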

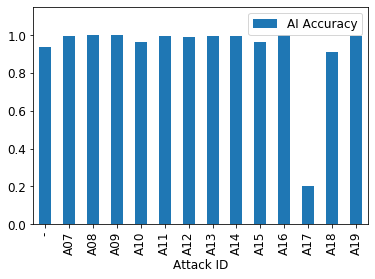

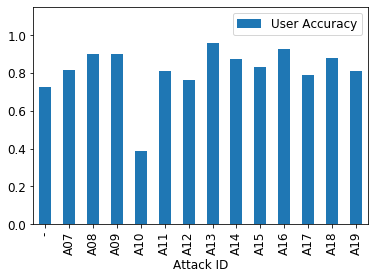

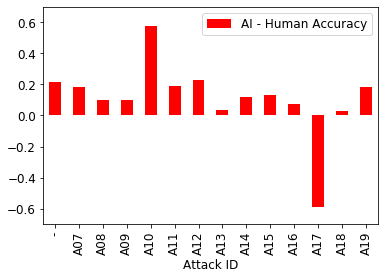

The following charts show the results:

The AI outperforms the human players in all but one attack scenario. We interpret these results as follows:

- AI has a clear advantage over humans in the domain of audio deepfake detection.

- Still, in some cases, humans outperform the AI.

For more information, read our paper, on which this blog post is based.